Introduction

Feature store platforms help data teams create, manage, and serve machine learning features consistently across training and serving. In simple terms, they prevent the “two versions of the truth” problem, where training uses one feature definition and production uses another. They matter because ML systems are now expected to be reliable, fast to ship, and easy to monitor at scale. Typical use cases include real-time fraud detection, product recommendations, customer churn prediction, demand forecasting, and personalization.

When evaluating a feature store, focus on offline and online feature support, point-in-time correctness, governance and ownership, feature versioning, lineage, integration with data warehouses and streaming tools, latency, scalability, security controls, monitoring, and operational ease.

Best for: ML engineers, data engineers, data scientists, platform teams, and enterprises building production ML systems that require consistent features across many models.

Not ideal for: teams doing only exploratory notebooks, one-off models, or simple batch scoring where feature reuse and real-time serving are not required.

Key Trends in Feature Store Platforms

- Stronger focus on point-in-time correctness as a non-negotiable requirement

- More real-time and streaming-first feature pipelines for low-latency inference

- Deeper integration with data warehouses and lakehouse ecosystems

- Feature governance becoming a platform priority with ownership, approval, and audit trails

- Feature monitoring and drift detection increasingly expected as built-in capabilities

- Feature discovery and reuse improving through catalogs and semantic metadata

- More demand for standard APIs across offline and online feature access

- Increased emphasis on reproducibility through versioning and feature lineage

- Cost optimization features for storage, compute, and serving workloads

- Tighter security expectations around access control, encryption, and tenant isolation
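
To make the drift-detection trend above concrete, here is a minimal sketch of one common drift statistic, the Population Stability Index (PSI), computed between a baseline sample and a recent sample of a single numeric feature. The function name, the bin count, and the small floor used for empty bins are illustrative assumptions; none of the platforms below is claimed to compute drift this way.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for constant features

    def bin_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor (assumed value) keeps log() defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bin_fractions(expected)
    a = bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical samples: a baseline and a recent window shifted upward.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(baseline, baseline))  # identical samples
print(population_stability_index(baseline, shifted))   # shifted sample
```

Identical samples give a PSI of zero, and the value grows as the recent distribution moves away from the baseline. Alert thresholds are a team decision and should be tuned per feature.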

How We Selected These Tools (Methodology)

- Picked platforms and frameworks recognized for feature store capability and adoption

- Prioritized tools with both offline and online feature patterns or a strong enterprise use story

- Focused on integration breadth across common data and ML ecosystems

- Considered operational maturity: monitoring, governance, and production stability patterns

- Included a balanced mix of open-source, managed, and enterprise-grade offerings

- Evaluated how well the tool supports reuse, discoverability, and team collaboration

- Looked at performance patterns for feature retrieval and serving latency needs

- Considered fit across different company sizes and ML maturity levels

- Ensured the final list covers multiple architectural approaches without duplicates

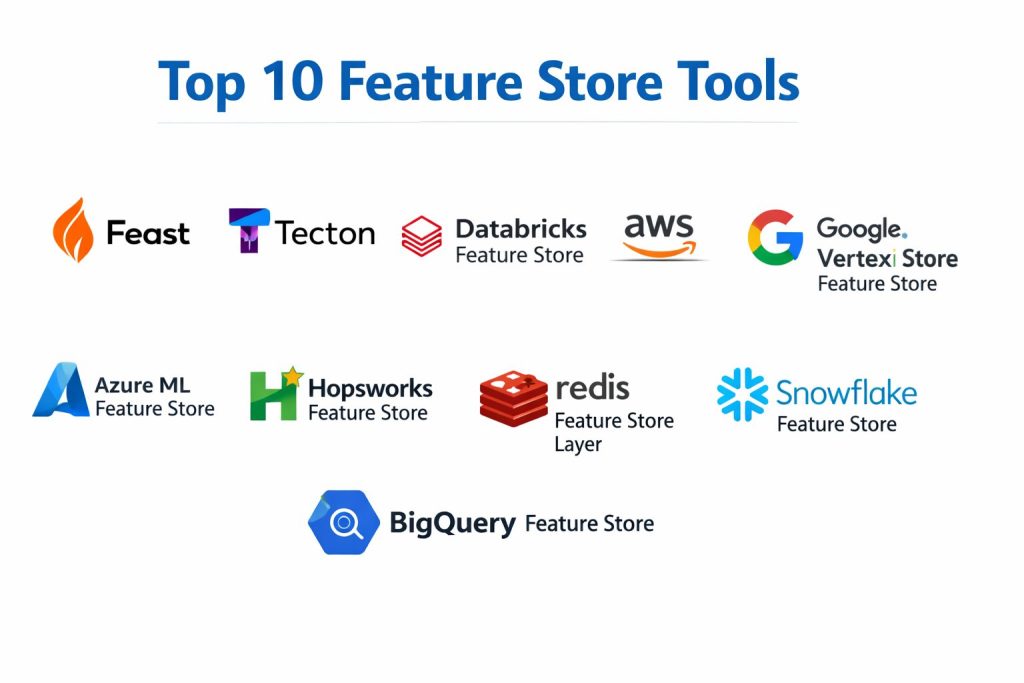

Top 10 Feature Store Platforms

1) Feast

An open-source feature store that helps teams manage and serve features for training and online inference. Often chosen by teams that want flexibility and control over infrastructure.

Key Features

- Supports offline and online feature access patterns (setup dependent)

- Feature definitions that can be reused across models and teams

- Integrates with common storage and serving backends (varies by deployment)

- Helps enforce consistency between training and serving feature values

- Supports feature discovery through registry and definitions

- Works well with batch pipelines and streaming workflows (setup dependent)

- Fits into custom MLOps stacks where teams control components

Pros

- Flexible and infrastructure-agnostic for teams with strong engineering capacity

- Strong community adoption and familiar patterns in modern ML stacks

Cons

- Operational setup and maintenance can be heavy for small teams

- Requires careful architecture decisions to meet latency and reliability goals

Platforms / Deployment

- Windows / macOS / Linux

- Self-hosted

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Varies / N/A

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Feast typically integrates with data warehouses, lakehouse storage, streaming tools, and ML training systems depending on architecture choices.

- Offline stores and warehouses: Varies / N/A

- Online stores: Varies / N/A

- Streaming pipelines: Varies / N/A

- ML frameworks and orchestration: Varies / N/A

Support & Community

Strong open-source community and documentation; enterprise-grade support depends on third-party offerings and internal capability.

2) Tecton

A managed feature platform designed for production ML teams that need reliable feature pipelines, governance, and real-time serving performance.

Key Features

- Managed feature pipelines for offline and online use cases

- Built-in tooling for feature definitions and reuse across teams

- Supports real-time feature serving patterns for low-latency inference

- Strong focus on operational reliability and production readiness

- Workflow patterns for feature monitoring and performance management (varies)

- Helps reduce feature engineering duplication across models

- Integrates into broader data and ML ecosystems (setup dependent)

Pros

- Strong fit for teams needing production-grade real-time feature workflows

- Reduces operational overhead compared to building from scratch

Cons

- Typically better suited for mature teams with clear production needs

- Cost and vendor dependency can be trade-offs for smaller organizations

Platforms / Deployment

- Web

- Cloud

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Tecton typically integrates with common warehouse and streaming systems and connects to training and serving workflows through platform connectors.

- Warehouses and lakehouse ecosystems: Varies / N/A

- Streaming and real-time pipelines: Varies / N/A

- Model training and deployment systems: Varies / N/A

- Observability integrations: Varies / N/A

Support & Community

Enterprise-focused support and onboarding; community resources vary because, unlike open-source projects, the platform is not primarily community-driven.

3) Databricks Feature Store

A feature store capability designed for teams already building ML systems on a lakehouse platform. Strong for organizations standardizing on unified data and ML workflows.

Key Features

- Central feature discovery and reuse within a lakehouse-style workflow

- Supports offline feature computation and management patterns

- Works closely with notebooks and ML pipelines in the same environment

- Helps align data engineering and ML feature definitions

- Governance patterns via platform controls (varies by setup)

- Scales with large data processing workloads (platform dependent)

- Supports collaboration across teams through shared feature assets

Pros

- Strong fit when your data and ML stack is already standardized on the same platform

- Reduces data movement and simplifies pipeline architecture

Cons

- Less attractive if you do not want platform dependency

- Real-time serving capabilities depend on architecture and setup choices

Platforms / Deployment

- Web

- Cloud

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Databricks Feature Store integrates best within the Databricks ecosystem and related data tools connected to it.

- Lakehouse storage and processing: Varies / N/A

- Orchestration and CI patterns: Varies / N/A

- Model training and registry integrations: Varies / N/A

- External serving systems: Varies / N/A

Support & Community

Strong enterprise support options and abundant training resources; community knowledge varies by stack and use case.

4) AWS SageMaker Feature Store

A managed feature store option within a broader cloud ML ecosystem. Useful for teams building ML pipelines and serving in a cloud-first environment.

Key Features

- Managed storage and retrieval for features used in ML workflows

- Offline and online feature access patterns (architecture dependent)

- Integration into cloud-native data and ML pipelines

- Supports feature reuse across multiple models and teams

- Designed to reduce mismatch between training and serving features

- Works well with cloud deployment and operational patterns

- Governance and access controls tied to the broader platform (varies)

Pros

- Fits naturally into cloud-first ML and data workflows

- Reduces platform glue work when using the same ecosystem end-to-end

Cons

- Best experience often requires committing to the same ecosystem

- Architecture decisions can still be complex for real-time workloads

Platforms / Deployment

- Web

- Cloud

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Integrations are strongest inside cloud-native pipelines and services for ETL, streaming, training, and serving.

- Data pipelines and orchestration: Varies / N/A

- Streaming ingestion: Varies / N/A

- Model training and deployment: Varies / N/A

- Observability and governance tooling: Varies / N/A

Support & Community

Strong documentation and cloud community ecosystem; enterprise support quality depends on plan and relationship.

5) Google Vertex AI Feature Store

A managed feature store designed for teams building production ML systems in a cloud ML environment, especially where real-time features and centralized management are important.

Key Features

- Managed feature storage and retrieval patterns

- Designed for consistent feature use across training and serving

- Supports integration with cloud-based data pipelines

- Helps reduce repeated feature engineering by centralizing definitions

- Designed to scale with production ML workloads (usage dependent)

- Governance and access control patterns tied to platform capabilities

- Often used with broader ML lifecycle tooling in the same ecosystem

Pros

- Strong choice for cloud-first ML platforms needing managed operations

- Simplifies integration when the rest of the stack is in the same ecosystem

Cons

- Vendor dependency can be a trade-off if you prefer portability

- Real-world success depends on pipeline design and governance discipline

Platforms / Deployment

- Web

- Cloud

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Vertex AI Feature Store fits best when paired with cloud data warehousing, streaming, and model deployment in the same environment.

- Warehouse and data processing: Varies / N/A

- Streaming and event data: Varies / N/A

- Training, deployment, and monitoring: Varies / N/A

- Pipeline orchestration tools: Varies / N/A

Support & Community

Strong documentation and community learning, plus enterprise support options that vary by plan.

6) Azure Machine Learning Feature Store

A feature store capability aligned to a cloud ML platform and governance model. Best for teams standardizing on cloud-based ML pipelines and enterprise governance patterns.

Key Features

- Central management of feature definitions and reuse

- Supports consistent features across training and serving (setup dependent)

- Integrates with cloud data services and ML pipelines

- Governance and access control patterns that align with enterprise needs

- Scales with cloud-based compute and storage patterns (usage dependent)

- Helps reduce duplicated feature engineering across projects

- Fits into broader ML lifecycle workflows in the same ecosystem

Pros

- Strong for organizations already using the cloud ML ecosystem end-to-end

- Governance and identity integration can be simpler in enterprise environments

Cons

- Portability can be lower than open-source approaches

- Real-time serving design still requires architecture decisions

Platforms / Deployment

- Web

- Cloud

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Integrations are strongest with cloud-native data services, orchestration, and model operations.

- Data lake and warehouse services: Varies / N/A

- Pipeline orchestration: Varies / N/A

- Model deployment and monitoring: Varies / N/A

- Identity and access governance: Varies / N/A

Support & Community

Large community ecosystem with enterprise support; quality and depth depend on your exact plan and region.

7) Hopsworks Feature Store

A feature store platform designed around a managed or self-managed approach with emphasis on feature governance, collaboration, and reproducibility.

Key Features

- Feature registry and discovery to drive reuse across teams

- Offline and online feature management patterns (setup dependent)

- Feature versioning and lineage concepts to support reproducibility

- Governance features for ownership and feature approvals (varies)

- Integrates with ML pipelines for training and serving workflows

- Supports batch and streaming feature pipelines (architecture dependent)

- Designed for teams that want a dedicated feature store platform

Pros

- Strong focus on feature management fundamentals and collaboration

- Useful for teams that want a feature store as a central platform capability

Cons

- Setup and operations may still require platform engineering

- Ecosystem fit depends on your preferred data stack and architecture

Platforms / Deployment

- Web / Windows / macOS / Linux

- Cloud / Self-hosted / Hybrid

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Hopsworks often integrates with data processing, orchestration, and ML training systems depending on architecture choices.

- Warehouses and lakehouse storage: Varies / N/A

- Streaming ingestion: Varies / N/A

- Training and registry systems: Varies / N/A

- Observability and governance tooling: Varies / N/A

Support & Community

Support offerings vary by plan; community and documentation are generally strong for feature store-focused teams.

8) Redis (as an Online Feature Store Layer)

A popular in-memory datastore often used as the online serving layer for low-latency feature retrieval. It is typically combined with an offline store and feature pipeline tooling.

Key Features

- Very fast key-based retrieval for real-time inference needs

- Common choice for online feature serving when latency is critical

- Supports scalable caching and storage patterns (setup dependent)

- Works well as a serving layer behind feature store definitions

- Integrates with many application and ML serving stacks

- Useful for high-throughput workloads with careful design

- Often used as part of a broader feature store architecture

Pros

- Strong performance for online feature retrieval with low latency

- Widely understood and supported across engineering teams

Cons

- Not a complete feature store by itself

- Requires strong pipeline discipline to keep online and offline features consistent

Platforms / Deployment

- Windows / macOS / Linux

- Cloud / Self-hosted / Hybrid

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Redis integrates broadly as an online store component in feature store architectures.

- Offline store pairing: Varies / N/A

- Streaming ingestion pipelines: Varies / N/A

- Serving frameworks and APIs: Varies / N/A

- Observability and alerting systems: Varies / N/A

Support & Community

Strong community and extensive documentation; enterprise support options vary by plan and vendor offering.

9) Snowflake (as a Feature Store Foundation Pattern)

A data platform often used as the offline backbone for feature computation, storage, and governance. Teams commonly build feature store patterns on top of it using definitions, pipelines, and serving layers.

Key Features

- Strong offline feature computation and storage patterns (workflow dependent)

- Central data governance and access control options (platform dependent)

- Scales well for large analytic workloads and feature generation

- Supports feature reuse through curated tables and definitions (team dependent)

- Strong collaboration patterns for data teams

- Works well when paired with an online serving layer

- Often used as part of a broader feature store architecture

Pros

- Strong choice for offline feature consistency and governance workflows

- Reduces duplication when features are centralized in one data platform

Cons

- Not a complete feature store by itself

- Real-time serving requires additional components and careful design

Platforms / Deployment

- Web

- Cloud

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

Snowflake commonly integrates with orchestration, transformation layers, and serving systems used for ML pipelines.

- Data transformation tooling: Varies / N/A

- Orchestration and scheduling: Varies / N/A

- Online serving layer pairing: Varies / N/A

- ML training handoffs: Varies / N/A

Support & Community

Large enterprise community and support ecosystem; implementation patterns vary widely by organization.

10) BigQuery (as a Feature Store Foundation Pattern)

A data platform frequently used as an offline feature store base, where teams compute, store, and govern features before serving them through online layers.

Key Features

- Scalable offline feature computation and storage (workflow dependent)

- Strong fit for feature pipelines tied to analytics and event data

- Works well with scheduled and batch feature generation patterns

- Supports governance through platform access controls (varies)

- Helps centralize feature definitions in curated datasets (team dependent)

- Commonly paired with an online store for low-latency inference

- Works well with broader cloud data and ML ecosystems

Pros

- Strong offline scalability for feature computation and storage

- Good fit for event-driven analytics that feed ML pipelines

Cons

- Not a complete feature store on its own

- Real-time feature serving needs additional architecture components

Platforms / Deployment

- Web

- Cloud

Security & Compliance

- SSO/SAML, MFA, encryption, audit logs, RBAC: Not publicly stated

- SOC 2, ISO 27001, GDPR, HIPAA: Not publicly stated

Integrations & Ecosystem

BigQuery integrates well with cloud data processing, orchestration, and downstream ML tooling.

- Data pipelines and transformations: Varies / N/A

- Online serving layer pairing: Varies / N/A

- Training and deployment systems: Varies / N/A

- Monitoring and governance patterns: Varies / N/A

Support & Community

Strong documentation and a large cloud community; enterprise support options vary by plan.

Comparison Table (Top 10)

| Tool Name | Best For | Platform(s) Supported | Deployment | Standout Feature | Public Rating |
|---|---|---|---|---|---|
| Feast | Flexible open-source feature store stacks | Windows, macOS, Linux | Self-hosted | Infrastructure-agnostic feature definitions | N/A |
| Tecton | Production real-time features at scale | Web | Cloud | Managed real-time feature pipelines | N/A |
| Databricks Feature Store | Lakehouse-centered ML feature workflows | Web | Cloud | Unified data and ML feature reuse | N/A |
| AWS SageMaker Feature Store | Cloud-native ML feature management | Web | Cloud | Tight integration with cloud ML ecosystem | N/A |
| Google Vertex AI Feature Store | Managed feature store for cloud ML stacks | Web | Cloud | Centralized managed features for serving | N/A |
| Azure Machine Learning Feature Store | Enterprise governance with cloud ML workflows | Web | Cloud | Identity and governance alignment | N/A |
| Hopsworks Feature Store | Dedicated feature platform with governance focus | Web, Windows, macOS, Linux | Cloud / Self-hosted / Hybrid | Feature registry and collaboration | N/A |
| Redis (as an Online Feature Store Layer) | Low-latency online feature serving | Windows, macOS, Linux | Cloud / Self-hosted / Hybrid | Fast online retrieval | N/A |
| Snowflake (as a Feature Store Foundation Pattern) | Offline feature computation and governance | Web | Cloud | Scalable offline feature foundation | N/A |
| BigQuery (as a Feature Store Foundation Pattern) | Offline feature pipelines for event-driven data | Web | Cloud | Scalable analytics-driven features | N/A |

Evaluation & Scoring of Feature Store Platforms

Weights: Core features 25%, Ease 15%, Integrations 15%, Security 10%, Performance 10%, Support 10%, Value 15%.

| Tool Name | Core (25%) | Ease (15%) | Integrations (15%) | Security (10%) | Performance (10%) | Support (10%) | Value (15%) | Weighted Total (0–10) |
|---|---|---|---|---|---|---|---|---|
| Feast | 8.5 | 6.5 | 8.0 | 6.0 | 7.5 | 8.0 | 9.0 | 7.80 |
| Tecton | 9.0 | 8.0 | 8.5 | 6.5 | 8.5 | 7.5 | 6.5 | 7.95 |
| Databricks Feature Store | 8.5 | 8.0 | 8.0 | 6.5 | 8.0 | 8.0 | 7.0 | 7.83 |
| AWS SageMaker Feature Store | 8.0 | 7.5 | 8.0 | 6.5 | 8.0 | 8.0 | 7.0 | 7.63 |
| Google Vertex AI Feature Store | 8.0 | 7.5 | 8.0 | 6.5 | 8.0 | 8.0 | 7.0 | 7.63 |
| Azure Machine Learning Feature Store | 8.0 | 7.5 | 8.0 | 6.5 | 7.5 | 8.0 | 7.0 | 7.58 |
| Hopsworks Feature Store | 8.5 | 7.0 | 8.0 | 6.5 | 7.5 | 7.5 | 7.5 | 7.65 |
| Redis (as an Online Feature Store Layer) | 6.5 | 7.5 | 8.0 | 6.0 | 9.0 | 8.5 | 8.0 | 7.50 |
| Snowflake (as a Feature Store Foundation Pattern) | 6.5 | 8.0 | 8.0 | 6.5 | 8.0 | 8.0 | 7.0 | 7.33 |
| BigQuery (as a Feature Store Foundation Pattern) | 6.5 | 8.0 | 8.0 | 6.5 | 8.0 | 8.0 | 7.0 | 7.33 |

How to interpret the scores:

- These scores compare tools only within this list and reflect typical patterns.

- A higher total suggests broader fit across many teams, not a universal winner.

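- Some entries (Redis, Snowflake, BigQuery) are foundation patterns rather than complete feature stores, so their “core” score is lower even though their integration scores are on par with most dedicated platforms.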

- Security scoring is limited where details are not publicly stated and depends on your environment.

- Always validate with a pilot using your actual offline and online feature needs.
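
If you want to apply the same weighting to scores from your own pilot, the calculation can be sketched as below. The weights are the ones listed in this section; the `pilot_scores` values and the function name are hypothetical and are not taken from any row of the table.

```python
# Weights copied from the "Weights:" line of this section; they sum to 1.0.
WEIGHTS = {
    "core": 0.25,
    "ease": 0.15,
    "integrations": 0.15,
    "security": 0.10,
    "performance": 0.10,
    "support": 0.10,
    "value": 0.15,
}


def weighted_total(scores):
    """Return the weighted 0-10 total for one tool's per-criterion scores."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Hypothetical scores from an internal pilot (not a row from the table).
pilot_scores = {
    "core": 8.0,
    "ease": 6.0,
    "integrations": 7.0,
    "security": 9.0,
    "performance": 8.0,
    "support": 5.0,
    "value": 6.0,
}

print(f"{weighted_total(pilot_scores):.2f}")
```

Changing the weights to match your own priorities, for example raising security for a regulated workload, is often more informative than comparing the published totals directly.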

Which Feature Store Platform Is Right for You?

Solo / Freelancer

If you are learning or building small production systems, Feast can be a strong choice because it teaches the core concepts and lets you assemble your own stack. If your goal is to deliver quickly without operating many moving parts, a managed platform option may be easier, but cost and complexity must be justified by real production needs.

SMB

SMBs often need a balance of control and time-to-value. Feast can work well if you have strong engineering and want flexibility. If you are already committed to a lakehouse platform, Databricks Feature Store can reduce integration friction. For teams with real-time requirements, Tecton may reduce operational burden, but you should confirm the long-term cost model.

Mid-Market

Mid-market teams usually need governance, reuse across models, and stable pipelines. Databricks Feature Store is strong when your stack is centered on the same platform. Hopsworks Feature Store can be a good fit if you want a feature store as a dedicated platform capability. For cloud-first ecosystems, managed options like AWS SageMaker Feature Store, Google Vertex AI Feature Store, and Azure Machine Learning Feature Store can simplify identity and pipeline integration.

Enterprise

Enterprises typically care most about reliability, governance, and reusable features across dozens of models. Tecton can be a strong option for mature real-time production needs. If your organization is standardized on one major cloud or lakehouse ecosystem, choosing the aligned managed feature store can reduce organizational friction. Enterprises should also emphasize ownership workflows, access governance, auditability, and operational monitoring.

Budget vs Premium

Budget-first stacks often use Feast with a carefully chosen offline store and an online serving layer like Redis. Premium stacks typically rely on managed platforms that reduce operational work, but the cost must be justified by business value and criticality.

Feature Depth vs Ease of Use

If your team wants maximum control and portability, Feast tends to score well, but requires more engineering effort. If ease of onboarding and production operations matter most, managed platforms can reduce burden, provided your requirements fit the platform model.

Integrations & Scalability

If you already run a warehouse-first or lakehouse-first organization, Databricks Feature Store, Snowflake patterns, or BigQuery patterns can simplify offline feature pipelines. For low-latency serving, pairing them with an online layer like Redis can help, but you must design workflows that keep the offline and online stores consistent.

Security & Compliance Needs

Treat security as a shared responsibility across tool, storage, and pipeline environment. If compliance details are not publicly stated, do not assume them. Instead, validate identity integration, role-based access, audit trails, encryption, and governance controls through your internal security review process.

Frequently Asked Questions (FAQs)

1. What problem does a feature store solve most clearly?

It prevents training-serving mismatch and reduces duplicated feature engineering. It makes features reusable, consistent, and easier to govern across many models.

2. Do I always need both offline and online features?

No. Batch scoring can work with offline-only features. Online features matter when you need low-latency inference, personalization, or real-time decisioning.

3. What is point-in-time correctness and why does it matter?

It ensures that the features for each training example are computed using only data that was available at that example's point in time, which prevents data leakage. Without it, models can look better in offline evaluation than they perform in production.
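
A minimal sketch of a point-in-time lookup, using only the Python standard library, is shown here. The feature history, the timestamps, and the `value_as_of` helper are hypothetical; real feature stores implement the same idea as an "as of" join over much larger tables.

```python
from bisect import bisect_right
from datetime import datetime

# Hypothetical history of one feature for one customer, sorted by timestamp.
feature_history = [
    (datetime(2024, 1, 1), 3),
    (datetime(2024, 1, 10), 5),
    (datetime(2024, 1, 20), 9),
]


def value_as_of(history, event_time):
    """Return the latest value recorded at or before event_time.

    Returns None when no value existed yet, so a training row never
    receives a value that was written after the event it describes.
    """
    timestamps = [ts for ts, _ in history]
    idx = bisect_right(timestamps, event_time)
    return history[idx - 1][1] if idx > 0 else None


# Hypothetical label events observed at different times.
label_events = [
    datetime(2023, 12, 31),
    datetime(2024, 1, 10),
    datetime(2024, 1, 15),
]
training_rows = [(t, value_as_of(feature_history, t)) for t in label_events]
print(training_rows)
```

Whether a value written at exactly the event time counts as available is a design choice. This sketch includes it, and stricter pipelines may exclude it.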

4. Is a feature store the same as a data warehouse or lake?

No. Warehouses and lakes store raw and curated data. A feature store adds feature definitions, governance, reuse, and consistent access for training and serving.
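
To illustrate what "feature definitions, governance, reuse" add on top of stored data, here is a toy registry sketch. Every class, field, and feature name in it is hypothetical and is not the API of any platform in this list. It only shows the kind of metadata (owner, version, join key) that a warehouse table alone does not carry.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class FeatureDefinition:
    name: str
    version: int
    owner: str   # team accountable for the feature
    entity: str  # join key, for example "customer_id"
    description: str = ""


@dataclass
class FeatureRegistry:
    _features: dict = field(default_factory=dict)

    def register(self, feature):
        key = (feature.name, feature.version)
        if key in self._features:
            # Published versions stay immutable; a change needs a new version.
            raise ValueError(f"{feature.name} v{feature.version} already registered")
        self._features[key] = feature

    def latest(self, name):
        versions = [f for (n, _), f in self._features.items() if n == name]
        if not versions:
            raise KeyError(name)
        return max(versions, key=lambda f: f.version)


registry = FeatureRegistry()
registry.register(FeatureDefinition("purchases_30d", 1, "growth-team", "customer_id"))
registry.register(FeatureDefinition("purchases_30d", 2, "growth-team", "customer_id"))
print(registry.latest("purchases_30d"))
```

Keeping published versions immutable is what lets an older model keep training against the exact definition it was built with.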

5. What are common mistakes when implementing a feature store?

Common mistakes include skipping ownership rules, not standardizing naming conventions, ignoring point-in-time correctness, and building features per model instead of sharing definitions.

6. How do teams keep offline and online features consistent?

They use shared transformations, standardized pipelines, and validation checks. Strong monitoring and clear data contracts are essential for reliability.
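
One way to picture "shared transformations" plus a validation check is the sketch below: a single transform function is called by both the batch path and the online path, and a small comparison reports any keys whose values disagree. All function names, field names, and the log-scaling transform are assumptions made for illustration.

```python
import math


def transform_amount(raw_amount):
    """Single source of truth for the feature logic (log-scaled amount)."""
    return math.log1p(max(raw_amount, 0.0))


def build_offline_features(rows):
    """Batch path: apply the shared transform to historical rows."""
    return {row["txn_id"]: transform_amount(row["amount"]) for row in rows}


def build_online_feature(event):
    """Online path: apply the same transform to one live event."""
    return transform_amount(event["amount"])


def find_mismatches(offline, online, tolerance=1e-9):
    """Return sorted ids whose offline and online values differ."""
    shared_keys = offline.keys() & online.keys()
    return sorted(
        key for key in shared_keys
        if not math.isclose(offline[key], online[key], abs_tol=tolerance)
    )


# Hypothetical transactions used for both paths.
rows = [{"txn_id": "t1", "amount": 120.0}, {"txn_id": "t2", "amount": -5.0}]
offline = build_offline_features(rows)
online = {row["txn_id"]: build_online_feature(row) for row in rows}
print(find_mismatches(offline, online))
```

Running a check like this on a sample of recent entities is a cheap way to catch a pipeline that has drifted away from the shared definition.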

7. Can I use Redis alone as my feature store?

Redis is usually an online serving layer, not a full feature store. You still need feature definitions, offline computation, governance, and reproducibility patterns.
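
The sketch below uses a dict-backed stand-in for a key-value online store to show how narrow the serving layer's job is: write the latest values per entity, read them back by key, and treat old entries as stale. The class name, the key layout, and the TTL policy are hypothetical, and no Redis client calls are used. Note what is absent: feature definitions, offline computation, and versioning all have to live elsewhere.

```python
import time


class InMemoryOnlineStore:
    """Dict-backed stand-in for a key-value online store such as Redis."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._data = {}  # key -> (written_at, feature dict)

    @staticmethod
    def _key(feature_view, entity_id):
        # Hypothetical key layout: one entry per feature view and entity.
        return f"{feature_view}:{entity_id}"

    def write(self, feature_view, entity_id, features):
        key = self._key(feature_view, entity_id)
        self._data[key] = (self._clock(), dict(features))

    def read(self, feature_view, entity_id):
        """Return fresh features, or None when missing or older than the TTL."""
        entry = self._data.get(self._key(feature_view, entity_id))
        if entry is None:
            return None
        written_at, features = entry
        if self._clock() - written_at > self._ttl:
            return None  # stale: the caller should fall back to a default
        return features


store = InMemoryOnlineStore(ttl_seconds=60)
store.write("customer_stats", "c42", {"purchases_30d": 5})
print(store.read("customer_stats", "c42"))
```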

8. How long does it take to implement a feature store in production?

It depends on your ML maturity and data stack. A small pilot can be quick, but full governance, reuse, and monitoring usually take disciplined iteration.

9. How do I choose between open-source and managed platforms?

Open-source offers flexibility and portability but needs more engineering. Managed platforms reduce operational overhead but can increase vendor dependency and cost.

10. What should I test in a pilot before committing?

Test one end-to-end use case: feature definition, offline generation, online serving if needed, latency, reliability, and integration with your model training and deployment workflow.
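
For the latency part of such a pilot, a small harness like the following can report median and tail latency for any retrieval function. The `measure_latency_ms` helper and the dict used as a fake store are assumptions. In a real pilot, `fetch` would wrap your candidate platform's online read call.

```python
import statistics
import time


def measure_latency_ms(fetch, keys, warmup=10):
    """Call fetch(key) per key and return p50/p99 latency in milliseconds."""
    for key in keys[:warmup]:
        fetch(key)  # warm caches and connections before timing
    samples = []
    for key in keys:
        start = time.perf_counter()
        fetch(key)
        samples.append((time.perf_counter() - start) * 1000.0)
    cuts = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {"p50": statistics.median(samples), "p99": cuts[98], "n": len(samples)}


# Hypothetical stand-in for an online store: a plain dict lookup.
fake_store = {f"c{i}": {"purchases_30d": i} for i in range(1000)}
report = measure_latency_ms(fake_store.get, list(fake_store))
print(report)
```

Tail latency (p99) usually matters more than the median for real-time inference, so measure with realistic key distributions and from the same network location as your model server.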

Conclusion

Feature store platforms become valuable when your organization moves from one-off models to a portfolio of production ML systems that must stay consistent over time. The right choice depends on where you run your data stack, whether you need real-time serving, and how much platform engineering you can support. Open approaches like Feast provide flexibility and portability, especially when paired with a clear offline store and a dedicated online serving layer. Managed platforms can reduce operational complexity, but they work best when your team is already committed to a specific ecosystem and has strict production requirements. A practical next step is to shortlist two or three tools, pilot one real model workflow, confirm point-in-time correctness, validate latency needs, and finalize governance rules for feature ownership and reuse.