In the modern digital economy, data has evolved from being a simple byproduct of business into the primary engine of global innovation. However, many organizations struggle because their data pipelines are frequently clogged by manual processes and fragmented communication. Consequently, the industry is witnessing a massive transition toward DataOps, a methodology that applies the agility of DevOps to the complexities of the data lifecycle.

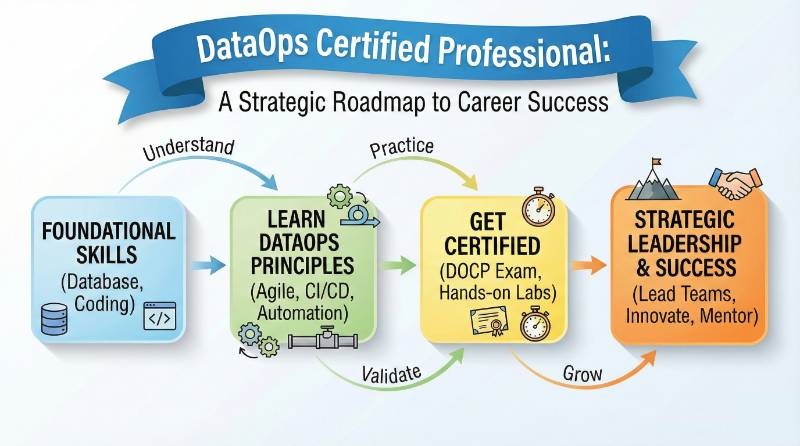

For software engineers and technical managers, mastering these automated workflows is no longer optional; it is a strategic necessity. Specifically, the DataOps Certified Professional (DOCP) has emerged as the gold standard for validating this expertise. By earning this credential, you move beyond being a traditional developer and become an architect of high-speed, high-quality information systems. This guide provides a comprehensive breakdown of how to master this domain and future-proof your career.

Choose Your Path: 6 Learning Tracks for the Modern Era

Understanding the broader ecosystem is the first step toward specialized success. Depending on your career goals, you might find yourself gravitating toward one of these six primary disciplines.

1. The DevOps Path

DevOps remains the bedrock of all modern software engineering. It focuses on breaking down silos between developers and IT operations through the use of CI/CD and automated testing. Furthermore, it establishes the cultural mindset of shared responsibility and continuous delivery.

2. The DevSecOps Path

In an era of constant cyber threats, security must be integrated directly into the automated pipeline. This track teaches you how to embed security protocols at every stage, from code to production. In addition, it ensures that your high-speed deployments do not create vulnerabilities for the enterprise.

3. The SRE (Site Reliability Engineering) Path

SREs are essentially software engineers who handle operations problems with code. They prioritize system uptime, scalability, and the concept of an “error budget.” If you are passionate about building systems that are both reliable and highly available, this path offers the technical depth you require.
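
To make the "error budget" idea concrete, here is a minimal sketch of the arithmetic, assuming an availability target expressed as a fraction and a rolling 30-day window. The function names are illustrative and are not taken from any SRE tool. With a 99.9% target over 30 days, the budget works out to roughly 43.2 minutes of allowed downtime.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Return the downtime (in minutes) that an availability SLO permits."""
    if not 0.0 < slo < 1.0:
        raise ValueError("slo must be a fraction strictly between 0 and 1")
    total_minutes = window_days * 24 * 60
    return (1.0 - slo) * total_minutes


def budget_remaining(slo: float, downtime_minutes: float, window_days: int = 30) -> float:
    """Return the unspent share of the error budget (negative if overspent)."""
    budget = error_budget_minutes(slo, window_days)
    return 1.0 - downtime_minutes / budget


if __name__ == "__main__":
    # Assumed example values: a 99.9% target and 21.6 minutes of downtime so far.
    print(f"budget: {error_budget_minutes(0.999):.1f} minutes")
    print(f"remaining: {budget_remaining(0.999, 21.6):.0%}")
```

A team that has spent its budget would typically slow down risky releases until the window recovers; how strictly to enforce that is a policy choice, not something the arithmetic decides.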

4. The AIOps / MLOps Path

This path sits at the intersection of artificial intelligence and operations. While MLOps focuses on the lifecycle of machine learning models, AIOps uses AI to manage and monitor IT infrastructure. Both tracks suit professionals working on complex, data-heavy AI transformations.

5. The DataOps Path

Our primary focus today is the DataOps track. This discipline applies the principles of DevOps specifically to data management. It ensures that the flow of information from the source to the final consumer is automated, high-quality, and completely transparent.

6. The FinOps Path

As cloud bills continue to rise, financial accountability has become a top priority. FinOps practitioners manage the variable spending model of the cloud. Consequently, they help organizations balance high-performance computing with cost-efficiency.

Role → Recommended Certifications Mapping

To help you decide which path to take, refer to this mapping that aligns your current professional role with the most impactful certifications.

| Current Professional Role | Recommended Certification Journey |
| --- | --- |
| DevOps Engineer | Certified DevOps Professional (CDP) |
| SRE / Systems Engineer | SRE Certified Professional (SREC) |
| Platform Engineer | Certified DevOps Architect (CDA) |
| Cloud Engineer | Cloud-Specific DevOps Specialist (AWS/Azure) |
| Security Engineer | DevSecOps Certified Professional (DSOCP) |
| Data Engineer | DataOps Certified Professional (DOCP) |
| FinOps Practitioner | Certified FinOps Professional |
| Engineering Manager | Certified DevOps Manager (CDM) |

Complete Certification Master Table

The following table provides a high-level view of the major professional tracks offered by DevOpsSchool and its partner network.

| Track | Level | Who it’s for | Prerequisites | Skills Covered | Recommended Order |
| --- | --- | --- | --- | --- | --- |
| DevOps | Foundation | Beginners | Basic Linux | Git, Docker, CI/CD | 1st |
| DevOps | Professional | Engineers | 2+ years of experience | Kubernetes, Terraform | 2nd |
| DataOps | Professional | Data Pros | SQL / Python | Airflow, Kafka, dbt | Specialization |
| SRE | Professional | Ops Experts | DevOps basics | Observability, SLAs | Advanced |
| DevSecOps | Professional | Security Pros | CI/CD basics | Vault, SonarQube | Advanced |
| MLOps | Professional | Data Scientists | Python, ML | Model CI/CD, MLflow | Specialization |
| AIOps | Professional | Managers/SREs | Ops knowledge | ELK, Prometheus, AI | Specialization |

Deep Dive: DataOps Certified Professional (DOCP)

What it is

The DataOps Certified Professional (DOCP) is a practitioner-level program that validates your technical ability to manage the automated data lifecycle. It confirms your mastery of building pipelines that are resilient, scalable, and compliant with modern standards.

Who should take it

This certification is designed for Data Engineers, Software Developers, and Database Administrators who are looking to move away from manual work. Furthermore, it is a significant asset for Technical Managers who need to oversee data-driven transformations.

Skills you’ll gain

- Workflow Orchestration: You will master tools like Apache Airflow to schedule and manage complex data dependencies.

- Real-Time Data Streaming: You will learn to use Apache Kafka for high-velocity data ingestion and processing.

- Quality Automation: You will implement rigorous testing frameworks that catch errors before they reach the data warehouse.

- Infrastructure as Code: You will gain the ability to deploy data environments reproducibly from version-controlled definitions, using container tooling such as Docker and Kubernetes.

- Data Governance: You will understand how to maintain data lineage, security, and privacy across the entire pipeline.
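
The orchestration skill above comes down to running tasks in an order that respects their dependencies. The sketch below illustrates that idea with Python's standard-library `graphlib` module rather than Apache Airflow itself. The task names and the `PIPELINE` mapping are hypothetical, and a real orchestrator adds scheduling, retries, and monitoring on top of this ordering step.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_join": {"extract_orders", "extract_customers"},
    "quality_checks": {"transform_join"},
    "load_warehouse": {"quality_checks"},
}


def execution_order(dependencies):
    """Return one valid run order.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(dependencies).static_order())


def run_pipeline(dependencies, tasks):
    """Call each task (a zero-argument callable) in dependency order."""
    results = {}
    for name in execution_order(dependencies):
        results[name] = tasks[name]()
    return results


if __name__ == "__main__":
    # Stand-in tasks that only report their own name.
    demo_tasks = {name: (lambda n=name: f"{n} done") for name in PIPELINE}
    for name, outcome in run_pipeline(PIPELINE, demo_tasks).items():
        print(name, "->", outcome)
```

The two extract tasks have no dependency on each other, so their relative order is not guaranteed. Only the constraints declared in the mapping are.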

Real-world projects you should be able to do

- Construct an end-to-end automated ETL pipeline that processes raw data into a structured cloud warehouse.

- Build a comprehensive observability dashboard that monitors the health and accuracy of all data flows.

- Implement a “Data-as-Code” system that utilizes Git for version-controlling your data schemas.

- Develop a self-healing ingestion system that automatically retries failed data jobs without human intervention.
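
As a starting point for the self-healing ingestion project, a retry wrapper with exponential backoff might look like the following sketch. The function name `run_with_retries` and its parameters are assumptions made for illustration. Orchestrators such as Airflow provide their own retry settings, which would normally be preferred over hand-rolled logic.

```python
import time


def run_with_retries(job, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run job() and retry on failure with exponential backoff.

    Waits base_delay * 2**(attempt - 1) seconds between attempts and raises
    RuntimeError once every attempt has failed. The `sleep` parameter is
    injectable so the wait can be replaced in tests.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception as exc:  # broad on purpose: any failure triggers a retry
            last_error = exc
            if attempt < max_attempts:
                sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"job failed after {max_attempts} attempts") from last_error
```

Retrying only helps with transient faults such as network timeouts. A job that fails because of bad input will fail on every attempt, so the final error should still reach a human or an alerting system.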

The DOCP Preparation Plan

Success in this certification depends on your preparation strategy. Refer to these timelines based on your current experience:

- Fast Track (7–14 days): Ideal for those with a strong DevOps background. Spend 5 hours daily on the specific syntax of Airflow and Kafka. Focus your energy on practical labs that simulate pipeline failures.

- Professional Path (30 days): This is the best choice for working engineers. Dedicate the first two weeks to data transformation and storage. Subsequently, spend the final two weeks on orchestration, security, and governance.

- Mastery Roadmap (60 days): Recommended for those transitioning from non-automated backgrounds. Spend the first month mastering SQL and Linux. Ultimately, use the second month to dive deep into cloud-native automation and orchestration.

Common Mistakes to Avoid

- Neglecting Data Quality: Remember that speed is useless if the data is inaccurate. Always prioritize automated validation gates.

- Manual Production Fixes: You must never fix a data issue manually in production. Instead, ensure all changes are committed via code in your repository.

- Over-Engineering: Avoid building complex streaming systems if a simple daily batch process meets the business requirement.

- Ignoring Observability: A pipeline is only effective if you know it is working. Always implement robust monitoring from the very start.
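
The "automated validation gate" mentioned in the first point can be as simple as a check that stops a batch before it is loaded. The sketch below is one possible shape, assuming rows arrive as dictionaries. The names `DataQualityError` and `validation_gate` and the null-fraction rule are illustrative assumptions, not part of any specific testing framework.

```python
class DataQualityError(Exception):
    """Raised when a batch fails a validation gate."""


def null_fraction(rows, field):
    """Return the share of rows where `field` is missing or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) is None)
    return missing / len(rows)


def validation_gate(rows, required_fields, max_null_fraction=0.0):
    """Return rows unchanged if they pass; raise DataQualityError otherwise.

    A batch fails if it is empty or if any required field is null in more
    than max_null_fraction of the rows.
    """
    if not rows:
        raise DataQualityError("batch is empty")
    failures = {}
    for field in required_fields:
        fraction = null_fraction(rows, field)
        if fraction > max_null_fraction:
            failures[field] = fraction
    if failures:
        raise DataQualityError(f"null threshold exceeded: {failures}")
    return rows
```

Placing a gate like this between the transform and load steps means a bad batch fails loudly in the pipeline instead of silently reaching the warehouse.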

Best Next Certification After This

After achieving your DOCP, you should consider the MLOps Certified Professional. This allows you to bridge the gap between your automated data pipelines and the deployment of production-grade machine learning models.

Next Certifications to Take: Expanding Your Growth

Once you have mastered the DataOps domain, you should consider advancing in these three directions:

- Same Track (Advanced Specialization): Pursue the Certified DataOps Architect to learn how to design complex, enterprise-wide data strategies.

- Cross-Track (Broadening Expertise): Consider the SRE Certified Professional to apply reliability engineering principles to your data stacks.

- Leadership (Growth): Look into the Certified DevOps Manager (CDM) to learn how to lead high-performing teams and manage technical digital transformations.

Top Training & Certification Support Institutions

Selecting the right partner for your education is critical for your long-term success. These institutions are recognized leaders in providing support for the DataOps Certified Professional (DOCP).

- DevOpsSchool: This institution is a global leader in technical training, offering immersive, tool-centric courses with lifetime access to materials. Their curriculum is heavily focused on hands-on labs and real-world project scenarios, making them the top choice for working professionals.

- Cotocus: Known for its boutique training style, Cotocus provides high-quality lab environments that simulate complex enterprise challenges. Their instructors are industry experts who prioritize practical application, ensuring that students are ready for the technical realities of the workplace.

- Scmgalaxy: This is a massive, community-driven platform that provides thousands of resources, tutorials, and expert-led sessions. They offer extensive support for students, helping them navigate the complexities of SCM and DevOps with ease and clarity.

- BestDevOps: They specialize in intensive bootcamps designed to take an engineer from a beginner to an expert in a short timeframe. Their focus is on high-impact, job-ready skills that can be applied to enterprise projects right away.

- devsecopsschool: While they focus on security, their DataOps integration courses are world-class. They teach you how to build pipelines that are not only fast but also completely secure from external threats and internal vulnerabilities.

- sreschool: This institution focuses on the reliability aspect of the data lifecycle. They are the go-to choice for learning how to make your DataOps systems self-healing and highly available for global users at any scale.

- aiopsschool: As data and AI converge, this school helps you stay ahead of the curve. They provide specialized training on using AI to monitor, optimize, and secure your automated data workflows for better business outcomes.

- dataopsschool: A dedicated branch that focuses purely on the data lifecycle. Their curriculum is deep and covers everything from data governance to advanced pipeline orchestration for big data environments and cloud-native stacks.

- finopsschool: For the cost-conscious professional, this school is essential. They teach you how to manage the massive cloud costs often associated with big data and DataOps projects effectively without sacrificing technical performance.

Frequently Asked Questions (Career & General)

- Is DataOps just a new name for Data Engineering? Not exactly. While Data Engineering is about building the pipeline, DataOps is about the automation, quality, and speed of that entire process.

- How much coding is required for this career? You do not need to be a software architect, but a solid command of SQL and Python is definitely required for automation and scripting tasks.

- Is the DOCP certification recognized globally? Yes, the certification is highly valued in tech-heavy regions like India, Europe, and North America by major global firms and startups alike.

- Will this certification lead to a salary hike? While results vary, many professionals see a 20% to 50% increase in their market value after gaining specialized automation credentials in the data niche.

- Can a manager benefit from taking the DOCP? Absolutely. Managers who understand the technical details of DataOps are better equipped to build efficient teams and set realistic performance goals.

- Does the course cover cloud-native tools? Yes, the program typically includes hands-on labs for major cloud providers like AWS and Azure, focusing on platform-agnostic tools for maximum portability.

- Is there a lot of math involved in DataOps? DataOps is more about engineering and automation than pure mathematics, though a basic understanding of data structures is certainly helpful.

- What is the “DataOps Manifesto”? It is a set of 18 principles that prioritize automation, quality, and collaboration over manual processes and silos in the data lifecycle.

- How long is the final certification exam? The exam usually lasts about 2 to 3 hours and includes both conceptual questions and practical, hands-on lab assessments to verify your skills.

- Do I need to be a DevOps expert first? While it helps, the DOCP course is designed to teach you the necessary DevOps principles as they apply specifically to the data environment.

- How does DataOps support AI initiatives? AI models are only as good as the data they consume. Therefore, DataOps is the foundation that makes reliable, timely, and accurate AI possible.

- Are there any community groups for students? Yes, institutions like Scmgalaxy and DevOpsSchool offer vibrant forums and alumni groups for ongoing networking, support, and job opportunities.

FAQs: DataOps Certified Professional (DOCP)

- What is the core objective of the DOCP? The goal is to turn you into an expert who can deliver high-quality, reliable data to the business faster and more efficiently.

- Which specific tools will I learn to use? You will primarily focus on industry standards such as Apache Airflow, Kafka, Docker, Kubernetes, and various cloud data warehouse environments.

- Is the certification exam conducted online? Yes, most providers offer remote proctoring so you can take the certification exam from the convenience of your home or office.

- How difficult is the DOCP certification? It is a professional-level certification, meaning it is challenging. However, with consistent lab practice and study, it is very achievable for most engineers.

- Does the curriculum cover data privacy? Yes, a significant part of the course is dedicated to ensuring data remains secure and compliant with global privacy laws like GDPR and HIPAA.

- Will I receive a digital badge upon passing? Yes, successful candidates receive a globally recognized certificate and a digital badge to share on professional networks like LinkedIn.

- Is there a prerequisite for the DOCP? A basic understanding of databases and Linux command-line tools is generally recommended before you begin this professional track.

- Does it cover real-time data ingestion? Yes, mastering real-time data streaming and processing is a major component of the professional certification requirements and lab work.

Conclusion

The era of manual data processing is officially coming to a close. As companies continue to evolve into data-driven entities, the need for automated and high-quality pipelines will only increase. The DataOps Certified Professional (DOCP) is more than just another certificate; it is your passport to a more efficient and rewarding career in the modern tech world. By mastering these skills, you ensure that your expertise remains relevant and highly sought after on the global stage.

Whether you are an engineer looking to master new tools or a manager striving for better team performance, embracing DataOps is the smartest move you can make today.