Introduction

Synthetic data generation tools create artificial datasets that behave like real data without exposing real people, real transactions, or sensitive records. Instead of copying production tables, these tools learn patterns, relationships, and distributions, then generate safe, usable data for testing, analytics, and machine learning. This matters because teams need faster access to high-quality data, while privacy rules, internal security policies, and risk teams increasingly restrict direct use of production data.

Common use cases include building safe test environments for software releases, creating training data for machine learning models, accelerating QA with realistic edge cases, sharing datasets with partners without leaking sensitive fields, and validating pipelines when production access is limited. When choosing a tool, evaluate data fidelity, privacy risk controls, support for structured and unstructured data, constraint handling, scalability on large tables, integration with pipelines, governance and approvals, ease of use for non-experts, auditability, and total cost of ownership.

Best for: data teams, QA teams, platform engineering, security teams, AI teams, and regulated industries that need realistic data without exposure risk.

Not ideal for: teams that only need tiny demo datasets or simple masked copies where realism and referential integrity do not matter.

Key Trends in Synthetic Data Generation Tools

- Wider adoption of privacy-first data access models to replace direct production cloning

- More focus on measuring privacy risk, not just masking fields

- Stronger support for multi-table relational data with referential integrity

- Increased use of constraint-driven generation for business rules and edge cases

- Synthetic data pipelines moving closer to CI workflows for testing and QA

- Higher demand for explainability, audit trails, and governance approvals

- Growth in domain-specific solutions for healthcare, finance, and public sector needs

- More attention on bias detection and fairness when using synthetic training data

How We Selected These Tools (Methodology)

- Chosen for credibility and adoption across privacy, testing, analytics, and ML use cases

- Included both enterprise platforms and strong open-source options for flexibility

- Evaluated ability to generate realistic multi-table relational datasets

- Considered privacy controls, governance posture, and organizational fit

- Looked at ecosystem maturity, integrations, and practical workflows

- Balanced ease of use with depth for advanced data engineering teams

- Included domain-oriented tools where healthcare-grade patterns are important

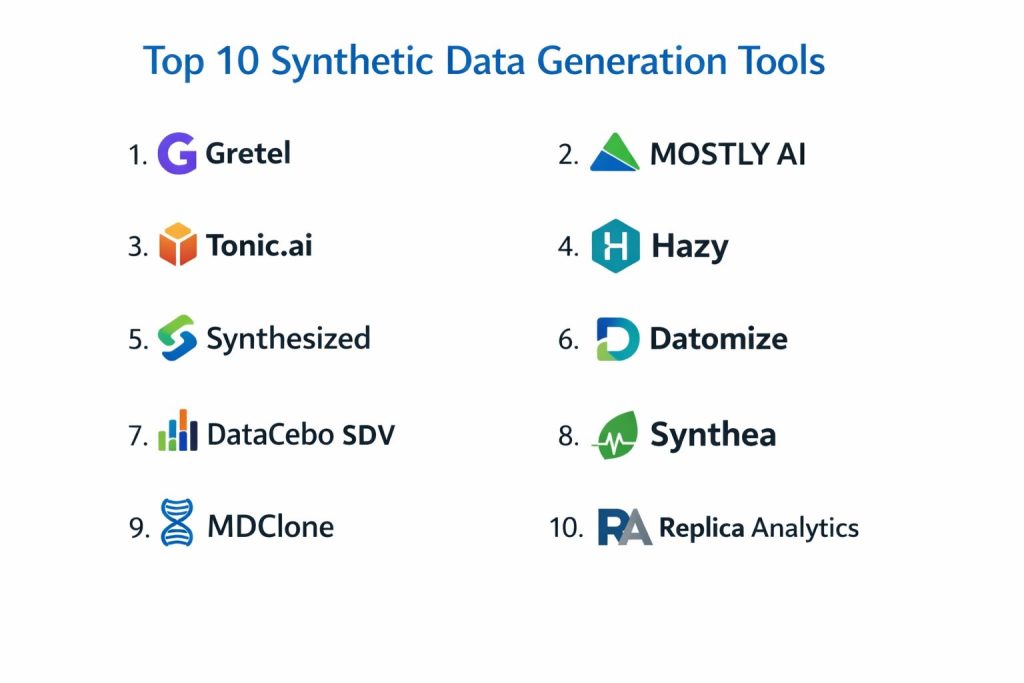

Top 10 Synthetic Data Generation Tools

1 — Gretel

A synthetic data platform focused on creating realistic datasets for ML, analytics, and testing with privacy-aware controls and developer-friendly workflows.

Key Features

- Synthetic generation for structured datasets and tabular workflows

- Configurable privacy and quality controls (varies by setup)

- Support for iterative experimentation and dataset tuning

- Helpful workflows for training data creation and sharing

- Practical features for teams building synthetic data pipelines

Pros

- Strong fit for teams needing synthetic data for ML and testing

- Good balance of usability and configurable controls

Cons

- Advanced governance details may be unclear in public materials

- Best results require careful evaluation of privacy and realism trade-offs

Platforms / Deployment

Cloud (other deployment models: Varies / N/A)

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Fits well into data engineering workflows where synthetic datasets are generated, validated, and delivered to downstream environments.

- API-driven automation patterns

- Common pipeline integration approaches

- Works best with defined data contracts and validation checks

Support and Community

Support tiers vary; ecosystem maturity depends on plan and team needs.

2 — MOSTLY AI

A synthetic data generation platform designed for privacy-preserving data sharing and analytics, often used where regulatory caution and governance matter.

Key Features

- Synthetic generation for structured and relational data patterns

- Controls for privacy protection and statistical similarity (varies by setup)

- Support for multi-table datasets and business logic needs

- Practical workflows for governed data access and sharing

- Quality evaluation approaches for usefulness and risk review

Pros

- Strong fit for data sharing and privacy-first analytics

- Useful for regulated environments with governance needs

Cons

- Implementation outcomes depend on data complexity and rules

- Some advanced integration details may require deeper evaluation

Platforms / Deployment

Cloud (other deployment models: Varies / N/A)

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Typically used in a governed workflow where synthetic datasets are generated, approved, and distributed to teams safely.

- Pipeline export patterns for analytics and testing

- Workflow integration depends on the environment

- Best paired with clear approval and audit processes

Support and Community

Support tiers vary; community visibility depends on region and industry.

3 — Tonic.ai

A platform focused on creating safe, realistic datasets for software testing and development, often positioned for engineering and QA teams.

Key Features

- Realistic data generation for development and QA workflows

- Constraint handling for common test scenarios and rules

- Repeatable dataset builds for consistent test environments

- Practical controls to protect sensitive values

- Workflow patterns for delivering data to non-production systems

Pros

- Strong for QA acceleration and developer productivity

- Good fit when teams need realistic test environments quickly

Cons

- Some governance and compliance details may not be clearly public

- Realism versus privacy trade-offs require careful validation

Platforms / Deployment

Cloud (other deployment models: Varies / N/A)

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Often integrates into engineering workflows where data refresh cycles and test pipelines matter.

- Automation-friendly dataset refresh patterns

- Fits well with CI-style testing practices

- Works best with clear schema and test requirements

Support and Community

Support tiers vary; onboarding experience depends on team maturity.

4 — Hazy

A synthetic data platform focused on privacy-preserving data generation for enterprise use cases, often aligned to financial and regulated settings.

Key Features

- Synthetic data generation for structured enterprise datasets

- Controls designed to reduce re-identification risk (varies by setup)

- Support for data sharing and collaboration workflows

- Designed to fit enterprise governance and approval processes

- Tools to evaluate similarity and utility (varies by product setup)

Pros

- Strong fit for regulated data sharing scenarios

- Designed for enterprise adoption patterns

Cons

- Tool fit depends heavily on internal governance requirements

- Some deployment and compliance specifics may require direct validation

Platforms / Deployment

Cloud (other deployment models: Varies / N/A)

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Typically used as part of a governed data workflow, where synthetic datasets are approved before wider access.

- Common data platform connectivity patterns

- Integration depends on enterprise environment

- Works best with clear risk review steps and metrics

Support and Community

Support is typically enterprise-oriented; community visibility varies.

5 — Synthesized

A data engineering-oriented platform focused on synthetic data, test data management, and privacy-aware dataset creation for development and analytics.

Key Features

- Synthetic generation for structured datasets and testing use cases

- Rule-based constraints and data quality controls (varies by setup)

- Support for relational data dependencies and consistency

- Practical workflows for repeatable dataset provisioning

- Tools for validation and data quality assessment (varies by setup)

Pros

- Good for teams that need repeatable test data with rules

- Useful in data engineering and QA environments

Cons

- Learning curve can rise with complex constraints and schemas

- Some security and compliance specifics may be unclear publicly

Platforms / Deployment

Cloud (other deployment models: Varies / N/A)

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Often fits into environments that already use data quality checks and automated provisioning practices.

- Pipeline automation patterns for dataset builds

- Works well with structured schema management

- Integrations depend on surrounding tools and storage platforms

Support and Community

Support tiers vary; adoption strength depends on region and sector.

6 — Datomize

A synthetic data solution typically used for generating realistic datasets for testing, analytics, and safe data sharing, often with privacy considerations.

Key Features

- Synthetic generation approaches for structured datasets

- Privacy-focused transformations and generation controls (varies by setup)

- Support for test data creation workflows

- Tools to help reduce exposure of sensitive attributes

- Practical export patterns for non-production environments

Pros

- Useful for teams focused on safer test data delivery

- Can help accelerate non-production data availability

Cons

- Public detail depth may be limited for some advanced features

- Governance and evaluation approach may require internal validation

Platforms / Deployment

Varies / N/A

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Typically works as part of a broader data workflow rather than a standalone “one-click” solution.

- Integrations depend on environment and target systems

- Works best with clear schema definitions and validation checks

- Pipeline automation can improve consistency and repeatability

Support and Community

Varies / Not publicly stated.

7 — DataCebo SDV

An open-source synthetic data toolkit widely used by data teams to generate synthetic tabular and relational datasets, often valued for flexibility and experimentation.

Key Features

- Synthetic generation for tabular and multi-table relational data

- Model-based generation approaches for realistic distributions

- Configurable workflows for experimentation and evaluation

- Strong fit for teams that want code-first control

- Community-driven ecosystem for extensions and examples

Pros

- High flexibility and strong value for engineering teams

- Transparent, code-driven workflows that are easy to test and version

Cons

- Requires engineering effort for production-hardening

- Governance and compliance controls depend on how you implement it

Platforms / Deployment

Self-hosted (other options: Varies / N/A, depending on environment)

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Fits well into Python-based data stacks where you want synthetic data generation to be part of pipelines and tests.

- Easy integration into data notebooks and ETL workflows

- Can be wrapped into internal services for repeatability

- Works best with strong validation metrics and monitoring

Support and Community

Strong community presence for open-source users; availability of enterprise-grade support varies.

8 — Synthea

An open-source synthetic health record generator used to create realistic patient-like data for research, testing, and education in healthcare contexts.

Key Features

- Synthetic patient record generation for healthcare-style datasets

- Configurable modules to model clinical pathways and events

- Useful for training, demos, and pipeline validation

- Supports scenario-driven generation for varied conditions

- Helpful for education and non-production healthcare testing

Pros

- Strong for healthcare demos and safe education datasets

- Open approach makes it easy to customize scenarios

Cons

- Primarily healthcare-focused, not general enterprise data

- Output realism depends on scenario design and configuration effort

Platforms / Deployment

Self-hosted, Varies / N/A

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Often used as a source for healthcare-style synthetic datasets that are later transformed into formats used in analytics tools.

- Works best with clear use-case modules

- Downstream integration depends on target systems

- Useful for pipeline testing without patient exposure

Support and Community

Community-driven support; documentation and user examples are helpful but vary in depth.

9 — MDClone

A synthetic data and data sandbox solution often used in healthcare environments to provide safe, realistic datasets for research, analytics, and operational improvement.

Key Features

- Synthetic data generation aligned to healthcare workflows

- Sandbox-style access patterns for analysts and researchers

- Tools designed to reduce privacy risk for sensitive records

- Support for cohort-style exploration and dataset creation

- Designed to fit governance and approval processes in regulated environments

Pros

- Strong fit for healthcare analytics and research enablement

- Useful when privacy restrictions block real data access

Cons

- Domain focus may be less suitable for general industries

- Implementation success depends on data quality and governance setup

Platforms / Deployment

Varies / N/A

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Typically used within a governed environment where synthetic datasets are generated for approved teams.

- Integration depends on hospital or research data platforms

- Works best with defined access and approval workflows

- Often paired with analytics tools in controlled environments

Support and Community

Enterprise-oriented support; community presence varies.

10 — Replica Analytics

A synthetic data solution commonly associated with privacy-preserving datasets for analytics, particularly in sensitive domains where sharing real records is risky.

Key Features

- Synthetic dataset generation for sensitive data sharing needs

- Privacy-aware generation and transformation capabilities (varies by setup)

- Support for analytics-focused data delivery

- Controls intended to reduce re-identification risk (varies by setup)

- Practical workflows for safe collaboration

Pros

- Helpful for privacy-first analytics and data sharing scenarios

- Useful when external collaboration requires safer datasets

Cons

- Public technical specifics may be limited in some areas

- Requires careful evaluation of realism, privacy, and utility

Platforms / Deployment

Varies / N/A

Security and Compliance

Not publicly stated

Integrations and Ecosystem

Often used where synthetic datasets must be shared across teams or external partners without exposing sensitive fields.

- Integration depends on storage and analytics environment

- Works best with clear utility targets and privacy thresholds

- Governance processes improve trust and repeatability

Support and Community

Varies / Not publicly stated.

Comparison Table

| Tool Name | Best For | Platform(s) Supported | Deployment | Standout Feature | Public Rating |
|---|---|---|---|---|---|
| Gretel | Synthetic data for ML and testing workflows | Varies / N/A | Cloud | Privacy-aware synthetic generation workflows | N/A |
| MOSTLY AI | Governed synthetic data sharing and analytics | Varies / N/A | Cloud | Enterprise privacy-first data sharing focus | N/A |
| Tonic.ai | Realistic test data for engineering and QA | Varies / N/A | Cloud | Practical test dataset provisioning approach | N/A |
| Hazy | Enterprise synthetic data for regulated environments | Varies / N/A | Cloud | Governance-oriented synthetic data workflows | N/A |
| Synthesized | Test data management with constraints and rules | Varies / N/A | Cloud | Repeatable dataset builds with constraints | N/A |
| Datomize | Safer non-production datasets for teams | Varies / N/A | Varies / N/A | Privacy-focused dataset generation patterns | N/A |
| DataCebo SDV | Code-first synthetic data generation toolkit | Varies / N/A | Self-hosted | Flexible open-source generation workflow | N/A |
| Synthea | Synthetic healthcare record generation | Varies / N/A | Self-hosted | Scenario-driven synthetic patient records | N/A |
| MDClone | Healthcare synthetic data and sandbox access | Varies / N/A | Varies / N/A | Regulated data enablement for research teams | N/A |
| Replica Analytics | Privacy-preserving synthetic datasets for analytics | Varies / N/A | Varies / N/A | Safe data sharing workflows for sensitive data | N/A |

Evaluation and Scoring of Synthetic Data Generation Tools

Weights

- Core features: 25 percent

- Ease of use: 15 percent

- Integrations and ecosystem: 15 percent

- Security and compliance: 10 percent

- Performance and reliability: 10 percent

- Support and community: 10 percent

- Price and value: 15 percent

| Tool Name | Core | Ease | Integrations | Security | Performance | Support | Value | Weighted Total |
|---|---|---|---|---|---|---|---|---|
| Gretel | 8.5 | 8.0 | 8.0 | 6.5 | 7.5 | 7.5 | 7.5 | 7.80 |
| MOSTLY AI | 9.0 | 7.5 | 7.5 | 7.5 | 8.0 | 7.5 | 6.5 | 7.77 |
| Tonic.ai | 8.5 | 8.5 | 7.5 | 7.0 | 7.5 | 7.0 | 6.5 | 7.65 |
| Hazy | 8.0 | 7.0 | 7.0 | 7.0 | 7.5 | 6.5 | 6.0 | 7.10 |
| Synthesized | 8.5 | 7.0 | 7.5 | 7.0 | 7.5 | 6.5 | 6.0 | 7.30 |
| Datomize | 7.5 | 7.0 | 6.5 | 6.5 | 7.0 | 6.0 | 6.5 | 6.82 |
| DataCebo SDV | 8.0 | 6.5 | 7.0 | 5.5 | 7.0 | 8.5 | 9.0 | 7.47 |
| Synthea | 6.5 | 6.0 | 5.5 | 5.5 | 6.5 | 7.5 | 9.5 | 6.72 |
| MDClone | 8.5 | 7.0 | 7.0 | 7.5 | 7.5 | 7.0 | 6.0 | 7.32 |
| Replica Analytics | 8.0 | 7.0 | 6.5 | 7.0 | 7.0 | 6.5 | 6.5 | 7.05 |

How to interpret the scores

These scores are comparative and help you shortlist tools based on typical buyer needs. A slightly lower total can still be the right choice if it matches your governance model or data domain. Core and integrations tend to drive long-term success, while ease drives faster adoption. Security reflects what a buyer can reasonably validate at evaluation time, but you should still confirm vendor capabilities directly. Use the scores to narrow options, then validate with a pilot using your real schemas and constraints.
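To make the arithmetic behind the Weighted Total column explicit, here is a minimal Python sketch that recomputes it from the listed weights. The names `WEIGHTS` and `weighted_total` are illustrative choices for this example and are not part of any tool covered here.

```python
# Weights copied from the "Weights" list in this section, as fractions of 1.
WEIGHTS = {
    "core": 0.25,
    "ease": 0.15,
    "integrations": 0.15,
    "security": 0.10,
    "performance": 0.10,
    "support": 0.10,
    "value": 0.15,
}


def weighted_total(scores):
    """Return the weighted sum of per-criterion scores on a 0-10 scale."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Per-criterion scores taken from two rows of the scoring table.
gretel = {"core": 8.5, "ease": 8.0, "integrations": 8.0, "security": 6.5,
          "performance": 7.5, "support": 7.5, "value": 7.5}
hazy = {"core": 8.0, "ease": 7.0, "integrations": 7.0, "security": 7.0,
        "performance": 7.5, "support": 6.5, "value": 6.0}

for tool, scores in (("Gretel", gretel), ("Hazy", hazy)):
    print(f"{tool}: {weighted_total(scores):.2f}")
```

This reproduces the 7.80 and 7.10 shown for Gretel and Hazy. Rows whose exact total ends in a 5 at the third decimal place (for example 7.775) can display as either neighbouring value depending on the rounding rule, so small differences in the last digit are not meaningful. If your priorities differ, changing the weights and rerunning the calculation is a quick way to see whether the shortlist changes.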

Which Synthetic Data Generation Tool Is Right for You?

Solo or Freelancer

If you want flexibility and strong value, DataCebo SDV is a practical code-first option when you are comfortable with engineering work and validation. If you work in healthcare demos or learning projects, Synthea can provide domain-shaped data that is safer to share. Solo users should focus on tools that are easy to repeat, easy to version, and easy to validate, because you do not have a large governance team to catch mistakes.

SMB

Small teams typically need quick wins: safe test data, repeatable dataset refreshes, and minimal overhead. Tonic.ai and Synthesized are often aligned to test-data style needs, while Gretel can be a fit when ML or experimentation is important. SMBs should prioritize ease, dataset repeatability, and practical integration into development and QA workflows.

Mid-Market

Mid-market organizations often need stronger governance, consistent approvals, and multi-team sharing. MOSTLY AI and Hazy can be a fit when synthetic data is used to unlock access across departments. Gretel may also work well when product teams and data teams collaborate on synthetic training datasets. Mid-market buyers should prioritize relational fidelity, access controls around outputs, and measurable privacy risk checks.

Enterprise

Enterprise environments usually require auditability, formal approvals, and consistent delivery into many non-production environments. MOSTLY AI, Hazy, and in some domains MDClone are often considered when governance is strict and sensitive data cannot be copied. Enterprises should pay special attention to privacy risk measurement, control of generation settings, data lineage for synthetic outputs, and integration into existing data platforms and identity controls.

Budget vs Premium

Budget-first teams often start with DataCebo SDV or domain-focused open tools like Synthea, then add governance processes internally. Premium platforms may reduce internal engineering load and provide smoother user experiences, but cost must be justified by reduced risk and faster delivery. A good approach is to pilot a premium option against an open-source baseline to see if the productivity and governance gains are real.

Feature Depth vs Ease of Use

If you want deep control and customization, code-first options like DataCebo SDV can be strong, but they demand engineering time and careful validation. If you want easier onboarding and faster time-to-value, managed platforms like Gretel, Tonic.ai, MOSTLY AI, and Synthesized may feel smoother for broader teams. Choose based on who will use the tool daily, not just who approves the purchase.

Integrations and Scalability

Synthetic data only helps if it arrives where teams work. Prioritize tools that can export in the formats your pipelines expect, refresh on schedules, and support multi-table datasets. For scalability, evaluate performance on your largest tables and how well constraints and referential integrity hold under volume. Also validate how generation jobs can be automated so refresh cycles do not become manual bottlenecks.

Security and Compliance Needs

Because many security claims are not publicly detailed, treat security as something you validate during evaluation. Focus on access control to generation projects, separation of roles, auditability of dataset creation, encryption expectations for stored outputs, and how the tool prevents leakage of rare or unique records. In regulated settings, it is often better to accept slightly lower realism if privacy risk is measurably reduced and governance teams can approve the approach confidently.

Frequently Asked Questions

1. What is synthetic data and how is it different from masked data?

Synthetic data is newly generated data that mimics the patterns of real data, while masking typically modifies fields in real records. Synthetic approaches can reduce exposure risk more than masking, but they still require careful validation of privacy and utility.

2. Can synthetic data be used for software testing?

Yes, especially when you need realistic distributions, edge cases, and consistent referential integrity across tables. The key is ensuring the synthetic dataset matches the scenarios your tests rely on.

3. Can synthetic data be used to train machine learning models?

It can be used in many cases, but you must validate that the synthetic data preserves the signals your model needs. You should also watch for bias shifts or missing rare patterns that matter in production.

4. How do I measure whether synthetic data is “good enough”?

Use utility metrics that match your use case, such as distribution similarity, relationship preservation, and performance of downstream queries or models. Also include privacy risk checks so you do not optimize usefulness at the cost of exposure.
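As one concrete example of a distribution-similarity check, the sketch below computes the total variation distance between the category shares of a real column and a synthetic column. It is a simplified illustration using only the Python standard library; the function names and the sample `plan` values are hypothetical, and a real evaluation would cover numeric columns and cross-column relationships as well.

```python
from collections import Counter


def category_shares(values):
    """Map each category to its share of the column (shares sum to 1)."""
    counts = Counter(values)
    total = len(values)
    return {category: n / total for category, n in counts.items()}


def total_variation_distance(real, synthetic):
    """Return 0.0 for identical category shares, 1.0 for no overlap."""
    if not real or not synthetic:
        raise ValueError("both columns must contain at least one value")
    p = category_shares(real)
    q = category_shares(synthetic)
    categories = set(p) | set(q)
    return 0.5 * sum(abs(p.get(c, 0.0) - q.get(c, 0.0)) for c in categories)


# Hypothetical "plan" column from a real table and a synthetic table.
real_plans = ["basic"] * 6 + ["pro"] * 3 + ["enterprise"] * 1
synthetic_plans = ["basic"] * 5 + ["pro"] * 4 + ["enterprise"] * 1

tvd = total_variation_distance(real_plans, synthetic_plans)
print(f"total variation distance: {tvd:.2f}")
```

A lower value means the synthetic column tracks the real category mix more closely. What counts as acceptable is a judgment call that depends on the use case, so treat any threshold as something to agree with the data consumers rather than a fixed rule.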

5. What are the biggest risks when using synthetic data?

Common risks include leaking patterns tied to unique records, losing critical relationships across tables, and generating unrealistic edge cases. Another risk is treating synthetic data as automatically safe without a privacy review process.
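A very basic first check for the leakage risk described above is to count how many synthetic rows are exact copies of real rows. The sketch below assumes rows are represented as tuples of comparable values; `exact_copy_rate` and the sample records are hypothetical. Exact matching only catches the most obvious leaks and says nothing about near-copies, so it complements a proper privacy review rather than replacing one.

```python
def exact_copy_rate(real_rows, synthetic_rows):
    """Return the fraction of synthetic rows identical to some real row."""
    if not synthetic_rows:
        raise ValueError("synthetic_rows must not be empty")
    real_set = {tuple(row) for row in real_rows}
    copies = sum(1 for row in synthetic_rows if tuple(row) in real_set)
    return copies / len(synthetic_rows)


# Hypothetical (age, state, condition) records.
real_rows = [
    (34, "NY", "diabetes"),
    (51, "TX", "asthma"),
    (29, "CA", "none"),
]
synthetic_rows = [
    (34, "NY", "diabetes"),  # identical to a real record
    (40, "NY", "asthma"),
    (29, "TX", "none"),
    (51, "CA", "none"),
]

rate = exact_copy_rate(real_rows, synthetic_rows)
print(f"exact copies: {rate:.0%}")
```

In this toy data one of the four synthetic rows duplicates a real record. Whether an exact match is a real problem depends on how identifying the combination of columns is, which is why rare or unique records deserve particular attention.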

6. How do these tools handle relational databases with many tables?

Many tools support multi-table generation, but quality depends on constraints, key relationships, and data complexity. Always pilot using your real schema and verify referential integrity and business rules.
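Verifying referential integrity can be as simple as looking for child rows whose foreign key has no matching parent. The following is a minimal sketch over rows held as dictionaries; the `customers` and `orders` tables, their column names, and the `find_orphans` helper are assumptions made for illustration only.

```python
def find_orphans(child_rows, parent_rows, child_fk, parent_pk):
    """Return child rows whose foreign key matches no parent primary key."""
    parent_keys = {row[parent_pk] for row in parent_rows}
    return [row for row in child_rows if row[child_fk] not in parent_keys]


# Hypothetical synthetic output for a two-table schema.
customers = [
    {"customer_id": 1, "segment": "retail"},
    {"customer_id": 2, "segment": "business"},
]
orders = [
    {"order_id": 100, "customer_id": 1, "amount": 25.0},
    {"order_id": 101, "customer_id": 2, "amount": 310.5},
    {"order_id": 102, "customer_id": 7, "amount": 12.0},  # no such customer
]

orphans = find_orphans(orders, customers, "customer_id", "customer_id")
print(f"{len(orphans)} orphaned order(s): {[o['order_id'] for o in orphans]}")
```

Running the same check for every foreign key in the schema, on every generated dataset, turns referential integrity from an assumption into something the pilot can measure.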

7. Is synthetic data acceptable for regulated industries?

It can be, but acceptance depends on risk assessment, governance controls, and measurable privacy protections. You should align with legal and security teams early and document evaluation results clearly.

8. What should a practical pilot look like?

Pick one important dataset and define success metrics for utility, privacy risk, and operational workflow. Generate multiple versions, compare results, and run downstream tests so you can measure real impact.

9. How do I avoid common mistakes during implementation?

Do not rely on one metric, do not skip constraint testing, and do not ignore rare categories that your business depends on. Establish a repeatable process for generation, validation, and approvals from the start.
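One way to avoid silently losing rare categories is to list the rare values present in the real column and confirm each one still appears in the synthetic column. The sketch below is illustrative only: `missing_rare_categories`, the 5 percent cutoff, and the payment-event values are assumptions, not a standard from any tool in this list.

```python
from collections import Counter


def missing_rare_categories(real, synthetic, max_share=0.05):
    """Return rare real categories (share <= max_share) absent from synthetic."""
    if not real:
        raise ValueError("real column must not be empty")
    counts = Counter(real)
    total = len(real)
    rare = {c for c, n in counts.items() if n / total <= max_share}
    return sorted(rare - set(synthetic))


# Hypothetical payment-event column: two categories are rare in the real data.
real_events = (["card"] * 60 + ["transfer"] * 37
               + ["chargeback"] * 2 + ["refund_dispute"] * 1)
synthetic_events = ["card"] * 65 + ["transfer"] * 33 + ["chargeback"] * 2

lost = missing_rare_categories(real_events, synthetic_events)
print(f"rare categories missing from synthetic data: {lost}")
```

Here `refund_dispute` is reported as missing. Note the tension with privacy: a tool may drop very rare values on purpose to reduce re-identification risk, so a missing category is a prompt for a conversation with the privacy reviewers, not automatically a defect.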

10. When should I prefer open-source over a managed platform?

Open-source is ideal when you need full control, strong customization, and you have engineering capacity for production-hardening. Managed platforms can be better when speed, broader usability, and governance workflows matter more than deep customization.

Conclusion

Synthetic data generation tools can remove one of the biggest blockers in modern data work: waiting for access to safe, realistic data. The best choice depends on who needs the data, how sensitive it is, and how repeatable your workflows must be. Code-first options such as DataCebo SDV can be excellent when you want flexibility and can invest in validation and internal governance. Managed platforms such as Gretel, MOSTLY AI, Tonic.ai, Hazy, and Synthesized can reduce friction for broader teams and support safer sharing patterns. Domain-focused tools like Synthea and MDClone can help in healthcare-style contexts. A simple next step is to shortlist two or three tools, run a pilot on a real schema, validate relational integrity and privacy risk, and then standardize the workflow for repeatable refresh cycles.