Three Lessons From Test-Driven Development

Source: securityintelligence.com

In 1999, Kent Beck’s “Extreme Programming Explained: Embrace Change” became an inspiration for rethinking the way software was developed. Three years later, his “Test-Driven Development: By Example” further elaborated on the need to reconsider the way software is planned, how teams operate and, most importantly, the way software is tested. To date, there are over 170 books on Amazon about test-driven development (TDD).

For readers curious about the origins of the concept, the Agile Alliance posted a short timeline of its evolution, along with a summary of its key points.

Take Your Software for a Spin

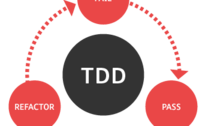

TDD is founded on the basic premise that test programs should be developed before the functionality that is to be tested, an approach also known as test-first development. In other words, one should write the test code before writing the code it exercises. With TDD, the test-first concept is supplemented with a focus on refactoring (revising and improving) the code base at every opportunity. In many respects, this is similar to continuous improvement cycles such as the Deming cycle.
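To make the test-first idea concrete, here is a minimal sketch in Python. The function `is_strong_password` and its rules are hypothetical, chosen only for illustration. The point is the ordering: the three tests are written first and describe the desired behavior, and the function is written afterward as the simplest code that makes them pass.

```python
# Step 1: the tests come first. At this point is_strong_password does not
# exist yet, so running the tests would fail; that failure is expected in TDD.
def test_rejects_short_password():
    assert not is_strong_password("Ab1!")


def test_rejects_password_without_digit():
    assert not is_strong_password("NoDigitsHere!")


def test_accepts_long_mixed_password():
    assert is_strong_password("Long-Enough-42")


# Step 2: only now write the simplest code that makes the tests pass.
# The rules below (minimum length, one letter, one digit) are illustrative
# assumptions, not a recommended password policy.
def is_strong_password(candidate: str, min_length: int = 12) -> bool:
    has_digit = any(ch.isdigit() for ch in candidate)
    has_letter = any(ch.isalpha() for ch in candidate)
    return len(candidate) >= min_length and has_digit and has_letter
```

A test runner such as pytest would collect the `test_` functions automatically. Once they pass, the code can be refactored with the tests acting as a safety net.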

For software developers, TDD introduces a shift in mindset. Instead of assuming the code works based on a few test cases in which the code performed as expected, design a series of assessments to stress test the functionality of your code. After every change to the code base, rerun the battery of tests. So instead of writing code and simply hoping it works, developers get repeatable evidence that each change has not broken existing behavior.
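A battery of tests can be as simple as a table of inputs and expected results that is rerun after every change. The sketch below assumes a hypothetical `parse_port` function. The table deliberately stresses boundaries and malformed input instead of checking only the cases where the code is expected to work.

```python
from typing import List, Optional, Tuple


def parse_port(text: str) -> Optional[int]:
    """Return a valid port number (1-65535), or None if the text is not one."""
    stripped = text.strip()
    # Accept plain ASCII digits only; this rejects signs, letters and "".
    if not (stripped.isascii() and stripped.isdigit()):
        return None
    port = int(stripped)
    return port if 1 <= port <= 65535 else None


# The battery: typical values, boundary values and malformed input.
BATTERY: List[Tuple[str, Optional[int]]] = [
    ("443", 443),
    (" 8080 ", 8080),
    ("1", 1),
    ("65535", 65535),
    ("0", None),
    ("65536", None),
    ("-1", None),
    ("http", None),
    ("", None),
]


def run_battery() -> List[str]:
    """Run every case and return a description of each failure."""
    failures = []
    for text, expected in BATTERY:
        actual = parse_port(text)
        if actual != expected:
            failures.append(
                f"parse_port({text!r}) returned {actual!r}, expected {expected!r}"
            )
    return failures
```

Calling `run_battery()` after each change and treating any non-empty result as a blocker is one way to put "rerun the battery of tests" into practice.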

Three Lessons to Take Away From Test-Driven Development

From a cybersecurity perspective, TDD provides us with three applicable lessons.

1. Are the Systems Working the Way They Should?

Without test programs in place, how can an organization provide assurances that its systems are actually operating normally? This concept can be applied in a business operations sphere, at the network and system levels, and in a security operations sphere. Test systems can help analysts determine whether the firewall is still properly configured and whether the intrusion detection system (IDS) is fully operational and capable of generating alerts.
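The same idea can be sketched for a firewall. The example below assumes that the firewall rules have already been exported into a simple list of records. The `Rule` structure and the forbidden-port policy are hypothetical and do not correspond to any vendor's format. The test fails as soon as a rule drifts away from the documented policy.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified firewall rule (not a real vendor format)."""
    action: str      # "allow" or "deny"
    direction: str   # "inbound" or "outbound"
    port: int


# Assumed policy for illustration: Telnet (23) and RDP (3389) must never be
# allowed inbound.
FORBIDDEN_INBOUND_PORTS = {23, 3389}


def find_policy_violations(rules: List[Rule]) -> List[Rule]:
    """Return every rule that allows inbound traffic to a forbidden port."""
    return [
        rule
        for rule in rules
        if rule.action == "allow"
        and rule.direction == "inbound"
        and rule.port in FORBIDDEN_INBOUND_PORTS
    ]


def check_firewall_matches_policy(rules: List[Rule]) -> None:
    """Raise AssertionError if the rule set violates the policy."""
    violations = find_policy_violations(rules)
    assert not violations, f"Policy violations found: {violations}"
```

Run on a schedule against the current rule export, a check like this turns "is the firewall still properly configured?" into a question with a repeatable answer.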

The concept can also be extended to the human resources and management spheres. By testing employees’ and managers’ reactions in the event of an alert, organizations can determine whether the response is appropriate according to documented procedures.

2. Don’t Deploy Another System Until You Know How to Test It

Imagine a world in which you are able to run tests on a new system prior to deploying it into your operational environment. Not only do you have assurances that the new system is working correctly, but you have also tested all the interactions this new system is going to have with the rest of your operations.

This lesson is undoubtedly one of the hardest to implement, in terms of both knowledge and resources. From a knowledge standpoint, we are at a point in history where we’re creating and deploying systems that are so complex that it becomes challenging for a single person to know how the system operates and how to test it. From a resource standpoint, putting a system through an appropriately realistic battery of tests would mean having the resources to clone a production environment, complete with test data, test systems, test networks and test security equipment.

While this lesson can be very challenging to fully implement, ignore it at your own peril. There are hundreds of documented cases in which organizations deployed patches or systems and only later realized how detrimental those changes were to their operating environment. Even worse, such an oversight could damage the confidentiality, integrity or availability of the data housed in those environments.

3. Refactoring Your Systems and, Eventually, Your Environment

A decade or two ago, organizations still had hardwired networks, systems and applications. Today, with the advent of infrastructure-as-a-service (IaaS), platform-as-a-service (PaaS) and software-as-a-service (SaaS), organizations are able to leverage cloud-based services to reduce costs and improve agility.

However, another benefit of everything running on software is the flexibility with which these systems can be changed. Need to define a new segmented network? You can do that in software. Need to connect application A to application B? You can use software as glue.

The first two lessons provide us with tests that our organizations can run to ensure that systems and networks are operating correctly. Armed with this assurance, we can take full advantage of all that is software-defined to reimagine how our IT operational environments are implemented and further refine these environments to improve cyber resilience for the entire organization.
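As one small illustration of refactoring a software-defined environment under test, the sketch below checks that a set of network segments do not overlap before a new segment is added. The segment names and address ranges are made up for the example, and only IPv4 ranges are assumed. The check uses Python's standard `ipaddress` module.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple


def overlapping_segments(segments: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return the name pairs of segments whose address ranges overlap.

    `segments` maps a segment name to an IPv4 range in CIDR notation.
    """
    networks = {
        name: ipaddress.ip_network(cidr) for name, cidr in segments.items()
    }
    return [
        (first, second)
        for first, second in combinations(sorted(networks), 2)
        if networks[first].overlaps(networks[second])
    ]


# Hypothetical current layout: two cleanly separated segments.
current = {"office": "10.0.0.0/24", "servers": "10.0.1.0/24"}

# Proposed refactoring: add a guest segment. This range sits inside "office",
# so the check should flag it before the change is deployed.
proposed = dict(current, guest="10.0.0.128/25")
```

Here `overlapping_segments(current)` returns an empty list, while `overlapping_segments(proposed)` reports the `guest` and `office` pair, so the mistake surfaces in a test and not in production.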

A Decade of Change

TDD was born out of a collective realization that we needed to change the way software was developed and tested. Similarly, the field of cybersecurity has, in the past decade, brought about a change in the way we think about layered defense in the face of determined attackers.

By leveraging these three lessons, we can mirror TDD for software development to create new ways of validating and improving the cyber resilience of our own systems.