Kubernetes Notes - Ranjith

Benefits/features of Kubernetes

- Container Orchestration

- Desired State

- Workload distribution

- Self-healing capabilities

- Automated Rollbacks

- Auto Scaling

- Load balancing

- Environment consistency for development through production

- Automated Scheduling

- Speed of deployment

- Ability to absorb change quickly

- Ability to recover quickly

- Hide complexity in the cluster

- Bin packing

- Resource utilization

- Secret and config management

How Kubernetes works

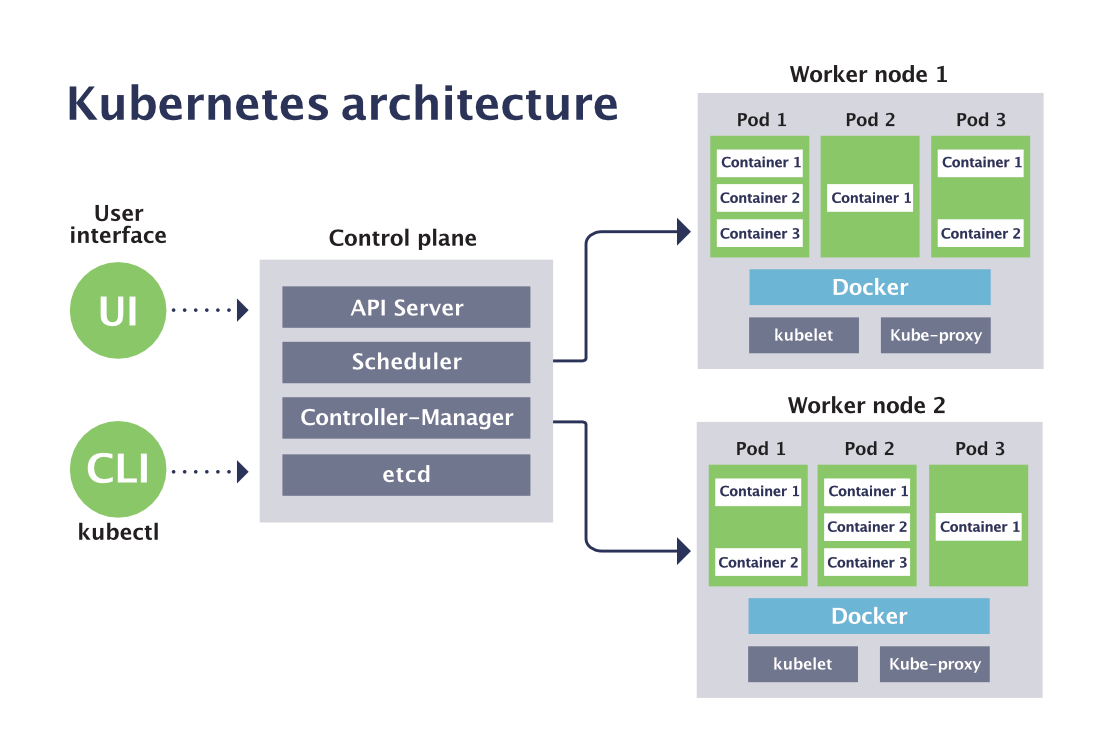

A UI or CLI client talks to the API server on the Kubernetes master (control plane). The API server validates each request and stores the resulting object in etcd. The controllers watch the API server and work to bring the cluster to the requested state. The scheduler assigns each new Pod to a worker node, and the kubelet on that node, which watches the API server for Pods assigned to it, instantiates the Pod. The Pod's containers are then started, and the application runs inside them.

Kubernetes is one of the most prominent technologies in modern microservices. It is designed to make managing clusters of containerized microservice applications simpler and more automated. Beneath this simple notion is a world of complexity.

One helpful way to think about Kubernetes is as a distributed operating system for containers. It provides the tools and commands necessary for orchestrating the interaction and scaling of containers (most commonly Docker containers) and the infrastructure the containers run on. As a general tool designed to work for a wide range of scenarios, Kubernetes is a very flexible system, and a very complex one.

Kubernetes Works Like an Operating System:

Kubernetes is an example of a well-architected distributed system. It treats all the machines in a cluster as a single pool of resources. It takes up the role of a distributed operating system by effectively managing the scheduling, allocating the resources, monitoring the health of the infrastructure, and even maintaining the desired state of infrastructure and workloads. Kubernetes is an operating system capable of running modern applications across multiple clusters and infrastructures on cloud services and private data center environments.

Like any other mature distributed system, Kubernetes has two layers consisting of the head nodes and worker nodes. The head nodes typically run the control plane responsible for scheduling and managing the life cycle of workloads. The worker nodes act as the workhorses that run applications. The collection of head nodes and worker nodes becomes a cluster.
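To make the "single pool of resources" idea concrete, here is a minimal sketch of how a scheduler might place a workload on one of several worker nodes. It is not the real kube-scheduler, which uses pluggable filter and score steps. The `Node` class, the `schedule` function, the tight-fit rule, and the node names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """A worker node with a fixed amount of CPU and memory (hypothetical model)."""
    name: str
    cpu_millicores: int
    memory_mib: int
    cpu_used: int = 0
    memory_used: int = 0
    pods: List[str] = field(default_factory=list)

    def fits(self, cpu: int, memory: int) -> bool:
        # A pod fits only if both CPU and memory stay within capacity.
        return (self.cpu_used + cpu <= self.cpu_millicores
                and self.memory_used + memory <= self.memory_mib)


def schedule(nodes: List[Node], pod: str, cpu: int, memory: int) -> Optional[str]:
    """Place the pod on the feasible node that would have the least CPU left.

    Returns the chosen node name, or None if no node has room
    (in a real cluster the Pod would stay Pending).
    """
    feasible = [n for n in nodes if n.fits(cpu, memory)]
    if not feasible:
        return None
    # Tight-fit rule (simple bin packing): fill up busy nodes first.
    target = min(feasible, key=lambda n: n.cpu_millicores - n.cpu_used - cpu)
    target.pods.append(pod)
    target.cpu_used += cpu
    target.memory_used += memory
    return target.name


if __name__ == "__main__":
    cluster = [Node("worker-1", 2000, 4096), Node("worker-2", 1000, 2048)]
    for pod_name in ("web-1", "web-2", "web-3"):
        print(pod_name, "->", schedule(cluster, pod_name, cpu=500, memory=512))
```

The caller never picks a machine. It states what the workload needs, and the placement logic treats all nodes as one pool.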

Components of Master

- kube-apiserver -> Front end of the Kubernetes control plane. Clients and cluster components interact with the cluster through it.

- kube-controller-manager -> Runs the controllers (for nodes, Pods, endpoints, etc.), which watch for changes and help maintain the desired state.

- kube-scheduler -> Assigns newly created Pods to worker nodes. It takes each node's resource capacity into account so that a worker node's load stays within an appropriate threshold. The kubelet on the chosen node then runs the Pod.

- etcd (storage) -> Consistent and highly available key-value store used as Kubernetes' backing store for all cluster data. Accepted requests are stored here in key-value format.
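The "desired state" idea behind the controllers can be sketched as a loop that compares what is stored with what is running and acts on the difference. This is a simplified illustration, not Kubernetes code. The `reconcile` function, the plain dictionaries standing in for the cluster store and the running Pods, and the naming scheme are all assumptions.

```python
import itertools
from typing import Dict, List, Tuple

# Hypothetical source of unique suffixes for new pod names.
_pod_ids = itertools.count(1)


def reconcile(desired: Dict[str, int],
              running: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Run one pass of a control loop.

    desired maps an application name to its wanted replica count
    (standing in for data kept in the cluster store).
    running maps an application name to the pods that currently exist.
    Returns the list of (action, pod_name) steps that were taken.
    """
    actions = []
    for app, replicas in desired.items():
        pods = running.setdefault(app, [])
        # Too few pods: create brand-new ones; old pods are never revived.
        while len(pods) < replicas:
            name = f"{app}-{next(_pod_ids)}"
            pods.append(name)
            actions.append(("create", name))
        # Too many pods: remove the surplus.
        while len(pods) > replicas:
            actions.append(("delete", pods.pop()))
    return actions


if __name__ == "__main__":
    desired_state = {"web": 3}
    actual_state: Dict[str, List[str]] = {}
    print(reconcile(desired_state, actual_state))  # three creates
    actual_state["web"].pop(0)                     # simulate a crashed pod
    print(reconcile(desired_state, actual_state))  # one replacement pod
```

Running the loop again when nothing has changed produces no actions, which is what makes this pattern safe to repeat continuously.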

Components of Worker Node

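- kubelet – The main Kubernetes agent on each node. It registers the node with the cluster, instantiates the Pods assigned to the node, and tracks their state to ensure the containers are up and running. It can expose a read-only endpoint on port 10255 (deprecated and disabled in many current clusters); its main API is served on port 10250.

- kube-proxy – Maintains the network rules on each node that route traffic addressed to a Service to the correct Pods and containers. Each Pod can have multiple containers as required, and all containers in a Pod share a single IP address. Pod IP addresses themselves are assigned by the cluster's network plugin, not by kube-proxy.

- Docker engine – The container runtime: it pulls images and runs containers. Since Kubernetes 1.24 the kubelet talks to a CRI-compatible runtime such as containerd or CRI-O rather than to Docker Engine directly.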

Components of Workstation

- kubectl – Command-line tool for running commands against a Kubernetes cluster's API server.

- Creation of JSON/YAML manifest files that describe the objects to create.
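As an illustration of the JSON file mentioned above, the sketch below builds a minimal Pod manifest and prints it as JSON. The helper name `pod_manifest` and the example values (`web`, `nginx:1.25`, port 80) are assumptions chosen for the example. The field names follow the core `v1` Pod schema.

```python
import json


def pod_manifest(name: str, image: str, port: int) -> dict:
    """Return a minimal single-container Pod manifest as a Python dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


if __name__ == "__main__":
    # Example values only; replace them with your own name, image, and port.
    print(json.dumps(pod_manifest("web", "nginx:1.25", 80), indent=2))
```

If the output is saved as `pod.json`, it could then be submitted from the workstation with `kubectl apply -f pod.json`.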

PODs

- A Pod is the most basic unit of work and the atomic unit of scheduling.

- Pods run on Workers/nodes in the cluster.

- Containers always run inside of Pods.

- A Pod can have one or more containers.

- All containers in a Pod share the same Pod environment.

- A Pod is in the Running phase as long as at least one container in that Pod is running.

- To run several copies of the same application, deploy them in separate Pods rather than as multiple containers in one Pod. Containers in a Pod share the Pod's network environment, so two containers in the same Pod cannot listen on the same port.

- Pods are never redeployed. A Pod that has gone away is replaced by a new Pod.

- Pods can be replicated, but each replica is a separate new copy of the Pod.

- If a node fails, Pods on the node are automatically scheduled for deletion.

- Pods talk to each other through Pod network.

- Containers in the same Pod can communicate with each other over localhost.

- Pods can communicate with each other by using another Pod's IP address or by referencing a resource that resides in another Pod.

- Phases of Pod status: Pending, Running, Succeeded, Failed, and Unknown.

- A Pod can be considered a self-contained, isolated “logical host” that contains everything needed by the application it serves.

- Pods are ephemeral. They are not designed to run forever, and when a Pod is terminated it cannot be brought back.
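The port restriction noted in the list above follows from containers in a Pod sharing one network environment. The sketch below reproduces the same effect on a single machine, with two sockets standing in for two containers: once the first is listening on a port, the second cannot bind to it. The function name `second_bind_fails` is hypothetical, and the example uses only the loopback interface.

```python
import socket


def second_bind_fails(host: str = "127.0.0.1") -> bool:
    """Return True if a second socket cannot bind a port that is already in use.

    Both sockets live in the same network namespace, much like two
    containers inside one Pod.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))          # port 0 lets the OS pick a free port
        first.listen()
        port = first.getsockname()[1]  # the port the "first container" holds
        try:
            second.bind((host, port))  # the "second container" wants the same port
        except OSError:
            return True                # address already in use
        return False
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    print("port conflict detected:", second_bind_fails())
```

Placing each copy of the application in its own Pod gives each copy its own IP address, so the same port number can be used by every copy without conflict.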